Trust Nothing, Secure Everything: How to Build Zero Trust on AWS

Published on 27.02.2025 by Jannis Schoormann

Legacy defences just aren't cutting it anymore. According to the 2024 IBM Cost of a Data Breach Report, the global average cost of a breach has risen to $4.88 million, a 10% jump over the previous year and the highest figure on record. Even more alarming, 1 in 3 breaches involved so-called "shadow data": information that organisations didn't even know they had. The good news? By using AI and automation in security, organisations saved an average of $2.22 million per breach.

Introduction

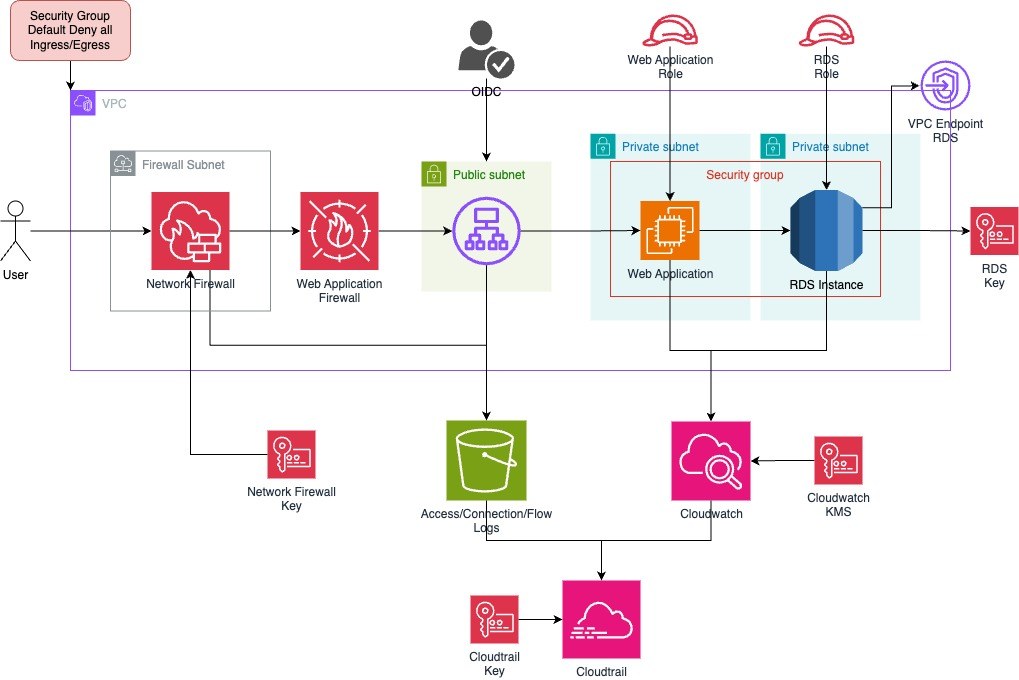

These numbers make one thing clear: we need security strategies that go beyond traditional perimeter defences. That's where the Zero Trust model comes in: it verifies every request, user and device instead of relying on implicit trust once someone is 'inside' the network. We're going to break down the five core principles of a Zero Trust architecture and show how to apply them in an AWS environment. We'll use a simple example of a web application hosted on EC2 with an RDS backend, demonstrating how to implement these principles with Terraform. Each step will be illustrated with an architecture diagram, gradually building a complete picture of a secure cloud infrastructure.

If you want to get stuck in straight away, you can find the full Terraform code and the GitHub Actions workflow (which includes Checkov for automated security checks) in my GitHub repository.

By the time you're done reading this, you'll have a solid understanding of Zero Trust and the hands-on knowledge to build a secure AWS environment yourself.

Let's dive in. 🚀

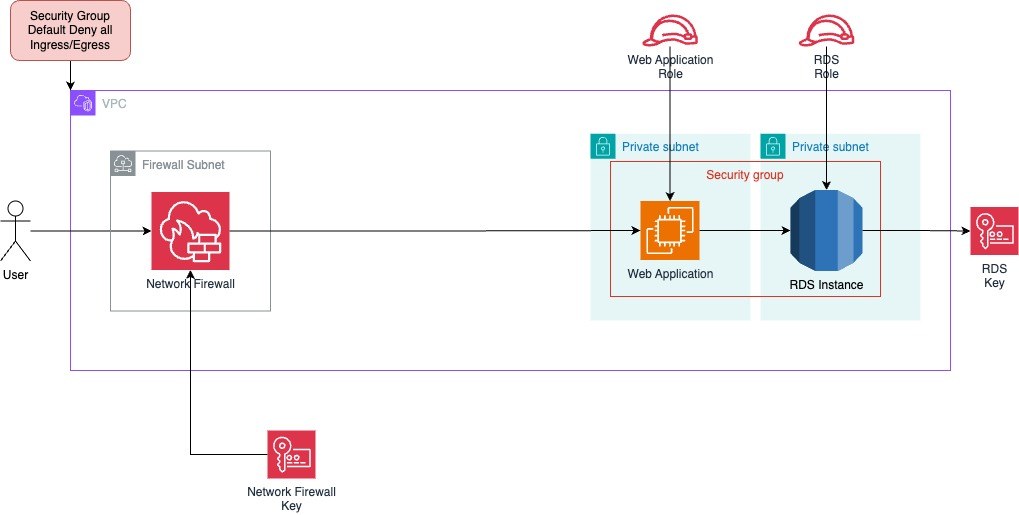

Principle #1: Distrust Everything

Imagine walking into a high-security facility where nobody assumes you belong there. Every time you enter a room, you need to show your ID, prove you have permission, and justify why you need access. That’s exactly how Zero Trust works. The “Distrust Everything” principle assumes that no entity should be trusted by default.

The idea is simple: nothing is allowed until it is explicitly verified and permitted. This forces organizations to define exactly what is acceptable, minimizing the risk of unauthorized access and unintentional security gaps. Unlike traditional security models that assume everything inside a network is trustworthy, Zero Trust ensures that every connection, every request, and every action is carefully checked before it’s allowed.

Implementing Distrust in AWS

In our AWS setup, we apply Distrust Everything by enforcing strict default-deny policies across the entire infrastructure. This means:

- No inbound or outbound traffic is allowed by default. Every communication path must be explicitly approved.

- Firewalls and security groups enforce granular access rules. Instead of relying on broad trust zones, each service must validate every connection.

- Authentication and authorization happen at every layer. Even internal traffic must be authenticated before access is granted.

For our web application running on EC2 with an RDS backend, this principle translates into several key security measures:

- Using AWS Network Firewall to inspect and filter all traffic at the network level.

- Restricting access between VPC subnets so that only necessary services can communicate.

- Configuring strict security group rules to ensure that only the right users and systems can access specific services.

- Enforcing encryption with TLS for network traffic, to prevent unauthorized interception, and with AWS Key Management Service (KMS) keys for data at rest.

By doing this, we eliminate implicit trust and force every interaction to prove its legitimacy before being allowed.
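As a rough, standalone Terraform sketch of the default-deny idea, the security groups below start with no rules at all, and the application tier then gets a single explicit ingress rule. The resource names, `var.vpc_id`, and port 8080 are assumptions for illustration, not necessarily what the repository uses.

```hcl
variable "vpc_id" {
  type        = string
  description = "ID of the VPC that hosts the application (assumed to exist)."
}

# Security group for the load balancer. No rules are declared here, so it
# permits nothing until rules are attached explicitly.
resource "aws_security_group" "alb" {
  name        = "zt-alb"
  description = "ALB tier, rules attached separately"
  vpc_id      = var.vpc_id
}

# Security group for the EC2 application tier. Terraform removes the
# allow-all egress rule that AWS would otherwise create, so with no
# inline rules this group denies all inbound and outbound traffic.
resource "aws_security_group" "app" {
  name        = "zt-app"
  description = "App tier, default deny"
  vpc_id      = var.vpc_id
}

# The only approved path in this sketch: the ALB may reach the app on one port.
resource "aws_vpc_security_group_ingress_rule" "app_from_alb" {
  security_group_id            = aws_security_group.app.id
  referenced_security_group_id = aws_security_group.alb.id
  ip_protocol                  = "tcp"
  from_port                    = 8080 # hypothetical application port
  to_port                      = 8080
  description                  = "Application traffic from the ALB only"
}
```

Referencing the ALB's security group instead of a CIDR range ties the rule to a specific tier, so it keeps working when IP addresses change.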

Drawbacks and Challenges

While Distrust Everything is a powerful security principle, it doesn’t come without its challenges.

- Operational Complexity: A strict Zero Trust model requires careful planning to ensure that legitimate users and services are not unintentionally blocked.

- Increased Management Overhead: Defining explicit access policies for every resource can be time-consuming, especially in large-scale environments.

- Potential Productivity Disruptions: If rules are too restrictive, teams may struggle to access the resources they need, leading to friction between security and operations teams.

To mitigate these issues, organizations should take an iterative approach, starting with a strong foundation and refining rules over time based on monitoring insights.

By questioning every connection and validating every request, we’ve established a foundation of strict security. Now, let's take this concept a step further by exploring how Least Privilege ensures that even verified entities only get the access they truly need.

Principle #2: Least Privilege

Imagine leaving the front door of your house wide open and handing out keys to everyone, just in case they might need access someday. That’s what happens when permissions in an AWS environment are overly permissive. The Least Privilege principle is all about flipping that approach on its head. Instead of granting broad access by default, you give out only the minimum permissions required to get the job done. This drastically reduces the attack surface, limiting the potential damage if an account is compromised or an internal mistake is made.

Why Does This Feel Like a Déjà Vu?

If this principle feels familiar, it's because it shares a family resemblance with "Distrust Everything." Both aim to reduce risk by limiting access and questioning assumptions of trust. "Distrust Everything" makes entities prove they belong each time, while "Least Privilege" ensures they can only do what they absolutely need to do. It's like seeing a familiar face in a crowd. You recognize the similarities, but each has its unique role.

This overlap is especially clear in AWS, where both principles rely on setting strict boundaries and granting permissions only when explicitly required. IAM Roles play a key part in both concepts, providing temporary, scoped permissions that reinforce Zero Trust by ensuring that even verified entities can only access what they need. This strategic use of roles creates a system where déjà vu isn't a sign of redundancy. It's a sign of layered, reinforced security.

Implementing Least Privilege in AWS

In AWS, Identity and Access Management (IAM) is the backbone of enforcing least privilege. With IAM, you can set exact permissions at different levels, like users, groups, roles, and even temporary credentials based on the session. But implementing it effectively requires discipline and attention to detail.

For our web application this principle means tightly controlling who and what can access our resources:

- EC2 Instances – Each instance should assume an IAM role with granular policies that grant only the permissions that are absolutely necessary.

- RDS Databases – Only specific EC2 instances should have database access, through IAM authentication or secure credentials.

By default, IAM users and roles should have zero permissions until explicitly granted. This forces us to be deliberate about what actions are truly needed.
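One way this could look in Terraform is an instance role whose only permission is to connect to one database as one database user through RDS IAM authentication. This is a standalone sketch: the variable names, the role name, and the database user `app_user` are hypothetical.

```hcl
variable "aws_region" {
  type        = string
  description = "Region of the RDS instance."
}

variable "db_resource_id" {
  type        = string
  description = "DbiResourceId of the RDS instance (hypothetical input)."
}

data "aws_caller_identity" "current" {}

# Trust policy: only the EC2 service may assume this role.
data "aws_iam_policy_document" "ec2_trust" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["ec2.amazonaws.com"]
    }
  }
}

resource "aws_iam_role" "app" {
  name               = "zt-app-role"
  assume_role_policy = data.aws_iam_policy_document.ec2_trust.json
}

# The single permission granted: connect to one database as one DB user.
data "aws_iam_policy_document" "db_connect" {
  statement {
    actions = ["rds-db:connect"]
    resources = [
      "arn:aws:rds-db:${var.aws_region}:${data.aws_caller_identity.current.account_id}:dbuser:${var.db_resource_id}/app_user"
    ]
  }
}

resource "aws_iam_role_policy" "db_connect" {
  name   = "rds-iam-connect"
  role   = aws_iam_role.app.id
  policy = data.aws_iam_policy_document.db_connect.json
}

# Instance profile that attaches the role to the EC2 instances.
resource "aws_iam_instance_profile" "app" {
  name = "zt-app-profile"
  role = aws_iam_role.app.name
}
```

Anything the application needs beyond this would be added as a further narrowly scoped statement, not as a wildcard.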

Tightening IAM Permissions

AWS IAM policies are great, but they're easy to get wrong. You can make them too restrictive or too permissive. Here are some ways to refine and strengthen your IAM strategy:

1. Use IAM Condition Keys

Condition keys let you fine-tune access control based on factors like IP address, device type, or time of day. For instance, you might enforce access to certain resources only when requests come from within your corporate VPN. This ensures that even if credentials are leaked, unauthorized users from outside your network can’t use them.
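A minimal, standalone sketch of such a condition, assuming the corporate network egresses through a known CIDR range: the address below comes from a documentation range and is a placeholder, and the policy name is made up.

```hcl
variable "corporate_cidrs" {
  type        = list(string)
  description = "Public CIDR ranges of the corporate network (placeholder default)."
  default     = ["203.0.113.0/24"]
}

data "aws_iam_policy_document" "deny_outside_network" {
  statement {
    sid       = "DenyOutsideCorporateRange"
    effect    = "Deny"
    actions   = ["*"]
    resources = ["*"]

    # Deny when the request does not originate from an approved range.
    condition {
      test     = "NotIpAddress"
      variable = "aws:SourceIp"
      values   = var.corporate_cidrs
    }

    # Do not block calls that AWS services make on the principal's behalf.
    condition {
      test     = "Bool"
      variable = "aws:ViaAWSService"
      values   = ["false"]
    }
  }
}

resource "aws_iam_policy" "deny_outside_network" {
  name   = "zt-deny-outside-corporate-network"
  policy = data.aws_iam_policy_document.deny_outside_network.json
}
```

Because this is an explicit deny, it overrides any allow attached to the same principal, so it should be tested against a non-critical user first.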

2. Leverage IAM Session Policies

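Instead of granting static long-term permissions, session policies allow for dynamically scoped access. A session policy is passed when a role is assumed and can only narrow what that session may do: the effective permissions are the intersection of the role's policies and the session policy. Because these permissions are assigned at runtime and expire with the session, they are ideal for short-lived access needs.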

3. Enforce MFA (Multi-Factor Authentication)

For human users, requiring MFA drastically improves security. Even if credentials are compromised, an attacker would still need a second factor (such as a hardware token or mobile app) to authenticate.
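A common pattern, sketched here as a standalone example, is a deny statement that applies whenever a request was not authenticated with MFA, while leaving just enough actions open for users to enrol a device. The group name and the exact list of exempted actions are assumptions to adapt.

```hcl
data "aws_iam_policy_document" "require_mfa" {
  statement {
    sid    = "DenyAllWithoutMFA"
    effect = "Deny"

    # Everything except the calls a user needs to set up MFA in the first place.
    not_actions = [
      "iam:CreateVirtualMFADevice",
      "iam:EnableMFADevice",
      "iam:ListMFADevices",
      "iam:ListVirtualMFADevices",
      "iam:ResyncMFADevice",
      "sts:GetSessionToken",
    ]
    resources = ["*"]

    # BoolIfExists also matches requests where the key is absent,
    # for example calls made with long-term access keys.
    condition {
      test     = "BoolIfExists"
      variable = "aws:MultiFactorAuthPresent"
      values   = ["false"]
    }
  }
}

# Hypothetical group for human users.
resource "aws_iam_group" "humans" {
  name = "zt-human-users"
}

resource "aws_iam_group_policy" "require_mfa" {
  name   = "require-mfa"
  group  = aws_iam_group.humans.name
  policy = data.aws_iam_policy_document.require_mfa.json
}
```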

4. Enable IAM Access Analyzer

AWS IAM Access Analyzer can help detect overly permissive policies by flagging unintended public access or excessive cross-account permissions.
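Turning the analyzer on takes very little Terraform. The analyzer name below is an assumption; an account-level analyzer treats the current account as its zone of trust.

```hcl
# Account-scoped analyzer: reports resources shared outside this account.
resource "aws_accessanalyzer_analyzer" "account" {
  analyzer_name = "zt-account-analyzer"
  type          = "ACCOUNT"
}
```

The analyzer only produces findings; reviewing and resolving them remains a manual or separately automated step.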

Finding the Right Balance

- Start with AWS Managed Policies (where applicable) – These are maintained by AWS and are a safer starting point than writing broad custom policies.

- Use service-linked roles – AWS services often provide pre-configured roles that follow best practices.

- Regularly review and refine permissions – Over time, unused permissions should be removed to prevent privilege creep.


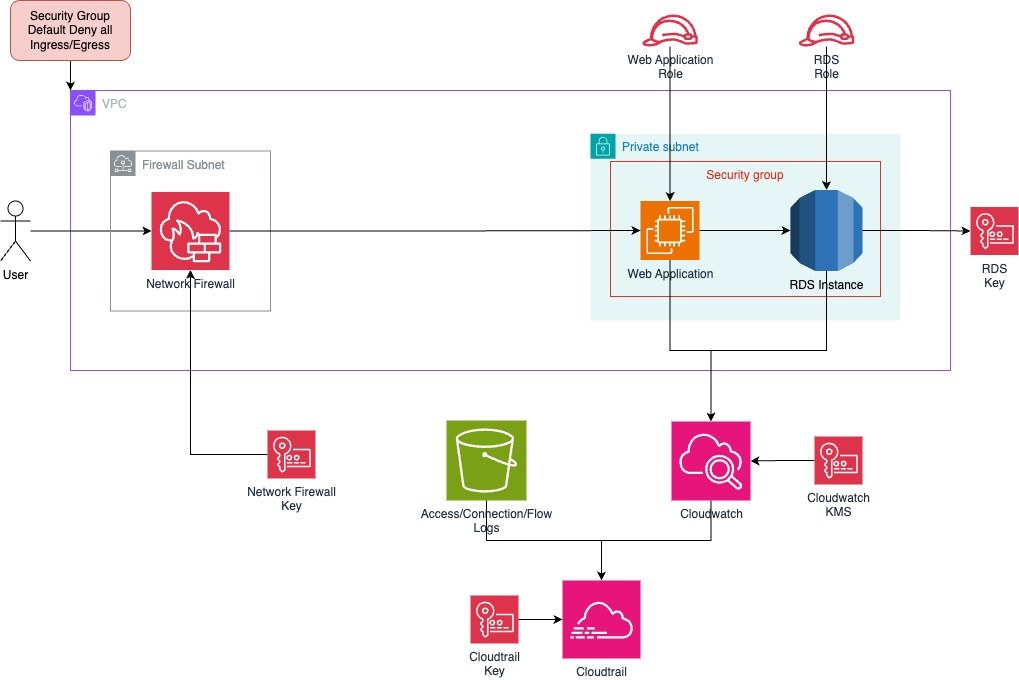

Principle #3: Continuous Monitoring

A perfectly locked-down environment today could become vulnerable tomorrow due to misconfigurations, new threats, or even insider risks. That’s why Continuous Monitoring is a critical part of a Zero Trust strategy. Instead of relying on static security measures, we need real-time visibility into our infrastructure, ensuring that every event, login, and network request is logged, analyzed, and, when necessary, acted upon.

Keeping an Eye on Everything

Continuous Monitoring means having a system in place that tracks activity across your AWS environment and raises the alarm if something looks off. It involves multiple layers of logging, alerting, and automated responses to potential threats. Without proper monitoring, an attacker could move undetected for weeks, quietly escalating privileges or exfiltrating data. In our AWS Zero Trust setup, we focus on three key monitoring tools:

- AWS CloudTrail – Records API activity in your account, including user logins, permission changes, and resource modifications. Management events are logged by default; data events (such as S3 object reads) must be enabled explicitly.

- Amazon CloudWatch – Collects logs and metrics from your infrastructure, enabling real-time monitoring, alerts, and dashboards.

- S3 Logging Buckets – Securely store logs with encryption, versioning, and lifecycle policies to prevent tampering.

These services work together to create a complete picture of what’s happening in the environment.

Implementing Continuous Monitoring in AWS

Let’s break it down with practical steps. In our web application with EC2 and RDS, we want to ensure that all major actions are logged and monitored.

1. Enable AWS CloudTrail for Audit Logs

CloudTrail acts as a security camera for your AWS environment. It records every API call, whether made by users, applications, or AWS services. To ensure proper monitoring, create a multi-region trail that logs events into an S3 bucket with encryption enabled.
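A standalone sketch of such a trail might look like the following. It assumes the log bucket and a KMS key already exist and that the bucket policy allows CloudTrail to write to it; the variable names and the trail name are hypothetical.

```hcl
variable "log_bucket_name" {
  type        = string
  description = "Name of an existing S3 bucket whose policy allows CloudTrail writes."
}

variable "trail_kms_key_arn" {
  type        = string
  description = "ARN of a KMS key that CloudTrail is permitted to use."
}

resource "aws_cloudtrail" "audit" {
  name           = "zt-audit-trail"
  s3_bucket_name = var.log_bucket_name
  kms_key_id     = var.trail_kms_key_arn

  # Capture activity from every region and from global services such as IAM.
  is_multi_region_trail         = true
  include_global_service_events = true

  # Digest files make later tampering with delivered log files detectable.
  enable_log_file_validation = true
}
```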

2. Set Up CloudWatch for Real-Time Alerts

While CloudTrail logs everything, CloudWatch helps you react to specific events in real time. You can define alarms and automate responses for security-sensitive activities. For example, you might want to trigger an alert if an IAM policy is modified or if an RDS database is accessed from an unexpected IP.
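As an illustration of the IAM policy example, the standalone sketch below counts policy-changing API calls in the trail's log group and raises an alarm on the first one. It assumes CloudTrail already delivers to a CloudWatch Logs group and that an SNS topic exists for notifications; both variables, the metric namespace, and the list of event names are assumptions.

```hcl
variable "cloudtrail_log_group_name" {
  type        = string
  description = "CloudWatch Logs group that receives CloudTrail events."
}

variable "alert_topic_arn" {
  type        = string
  description = "SNS topic that should receive the alarm notification."
}

# Turn matching CloudTrail events into a custom metric.
resource "aws_cloudwatch_log_metric_filter" "iam_policy_changes" {
  name           = "zt-iam-policy-changes"
  log_group_name = var.cloudtrail_log_group_name
  pattern        = "{ ($.eventName = PutRolePolicy) || ($.eventName = AttachRolePolicy) || ($.eventName = PutUserPolicy) || ($.eventName = AttachUserPolicy) || ($.eventName = CreatePolicyVersion) }"

  metric_transformation {
    name      = "IamPolicyChangeCount"
    namespace = "ZeroTrust/Security"
    value     = "1"
  }
}

# Alarm as soon as at least one change is seen within five minutes.
resource "aws_cloudwatch_metric_alarm" "iam_policy_changes" {
  alarm_name          = "zt-iam-policy-changed"
  namespace           = "ZeroTrust/Security"
  metric_name         = "IamPolicyChangeCount"
  statistic           = "Sum"
  period              = 300
  evaluation_periods  = 1
  threshold           = 1
  comparison_operator = "GreaterThanOrEqualToThreshold"
  treat_missing_data  = "notBreaching"
  alarm_actions       = [var.alert_topic_arn]
}
```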

3. Store Logs Securely in S3

Logs are valuable, but only if they remain intact. Attackers often try to erase their footprints, which is why securing your logs is just as important as collecting them. To prevent tampering, enable versioning and Object Lock on your CloudTrail S3 bucket. With a retention period in compliance mode, stored log versions cannot be deleted or overwritten until that period expires.
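A standalone sketch of a hardened log bucket could combine versioning, Object Lock, default encryption, and a public access block. The bucket name variable and the 365-day retention are assumptions to adjust to your own retention requirements.

```hcl
variable "log_bucket_name" {
  type        = string
  description = "Globally unique name for the log bucket (hypothetical input)."
}

resource "aws_s3_bucket" "logs" {
  bucket              = var.log_bucket_name
  object_lock_enabled = true
}

resource "aws_s3_bucket_versioning" "logs" {
  bucket = aws_s3_bucket.logs.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Compliance mode: locked versions cannot be deleted or overwritten by
# anyone, including the root user, until the retention period ends.
resource "aws_s3_bucket_object_lock_configuration" "logs" {
  bucket     = aws_s3_bucket.logs.id
  depends_on = [aws_s3_bucket_versioning.logs]

  rule {
    default_retention {
      mode = "COMPLIANCE"
      days = 365
    }
  }
}

resource "aws_s3_bucket_server_side_encryption_configuration" "logs" {
  bucket = aws_s3_bucket.logs.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "aws:kms"
    }
  }
}

resource "aws_s3_bucket_public_access_block" "logs" {
  bucket                  = aws_s3_bucket.logs.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}
```

Compliance mode is deliberately hard to undo, so a short retention period or governance mode is a safer choice while experimenting.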

Challenges of Continuous Monitoring

While monitoring is essential, it also comes with challenges. Log data can quickly become overwhelming, especially in large-scale environments. Without proper log retention policies and filtering mechanisms, costs can spiral out of control, and teams can drown in noisy alerts. To mitigate this, consider:

- Using AWS Config to track compliance and alert on misconfigurations.

- Forwarding logs to a Security Information and Event Management (SIEM) system for deeper analysis.

- Implementing automated responses with AWS Lambda, such as revoking access for a compromised IAM user.

Real-time visibility helps us detect suspicious behavior, but preventing lateral movement within the network is just as crucial. The next step is to implement Micro-Segmentation to limit attackers' ability to move through the infrastructure.

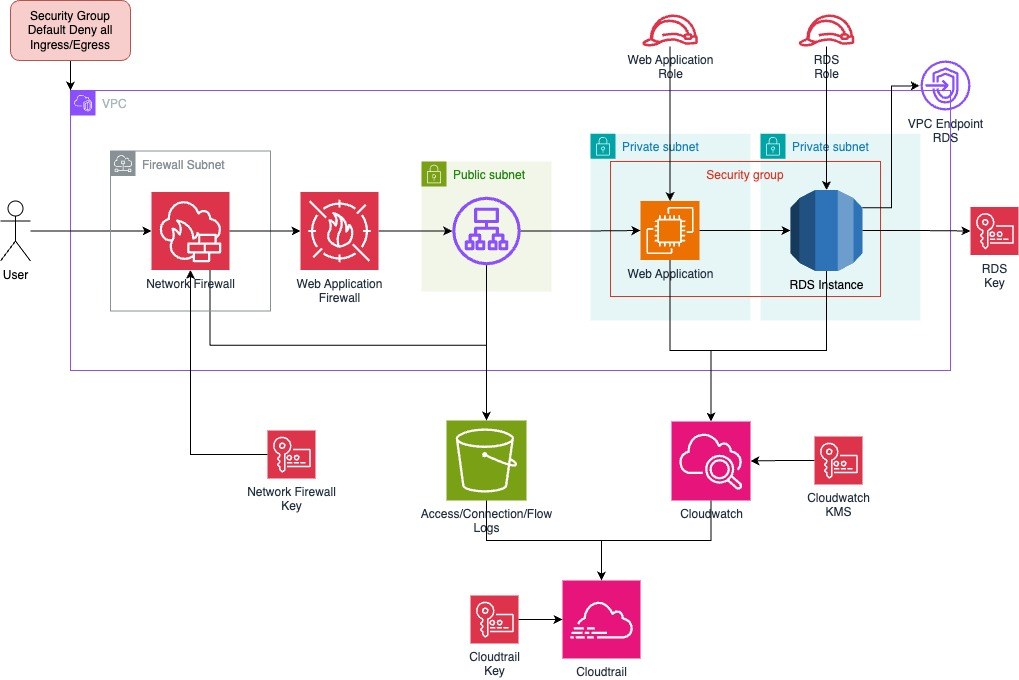

Principle #4: Micro-Segmentation

Network security isn’t just about keeping bad actors out. Even with the best perimeter defenses, an attacker who gets inside should not have free rein to move across the infrastructure. That’s where Micro-Segmentation comes into play. Instead of treating the network as one big open space, we carve it into smaller, isolated segments with strict controls over how they communicate.

This approach limits the potential damage if a breach occurs. An attacker who compromises one part of the infrastructure won't be able to move freely to other services or databases. Micro-segmentation enforces Zero Trust at the network level, ensuring that resources communicate only when explicitly allowed.

Structuring a Segmented AWS Environment

In our AWS setup, we break down the infrastructure into well-defined segments, each with clear rules about who can talk to whom. At a high level, we use:

- Public and Private Subnets – Public subnets handle external-facing services, while private subnets keep internal workloads from being directly reachable from the internet.

- Application Load Balancer (ALB) – This provides a controlled entry point for traffic, ensuring that even within the segmented network, access is tightly managed.

- Web Application Firewall (WAF) – Adds another layer of protection by filtering out malicious traffic before it even reaches the application.

- VPC Endpoint for RDS – Keeps calls to the RDS API on the AWS internal network. The database itself runs in private subnets with no public IP, so connections to it stay inside the VPC.

With security groups and fine-grained IAM policies, we ensure that each tier can only reach what it absolutely needs. No direct access to databases from the internet. No lateral movement between unrelated services. No over-permissive security groups allowing unrestricted inbound traffic.
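For the database tier, that could translate into a security group that accepts connections only from the application tier's security group. This is a standalone sketch: the variables are hypothetical, and port 5432 assumes a PostgreSQL engine.

```hcl
variable "vpc_id" {
  type        = string
  description = "ID of the VPC that hosts the database subnets."
}

variable "app_security_group_id" {
  type        = string
  description = "Security group ID of the EC2 application tier."
}

# No inline rules: the database tier starts fully closed.
resource "aws_security_group" "db" {
  name        = "zt-db"
  description = "Database tier, reachable from the app tier only"
  vpc_id      = var.vpc_id
}

# The single allowed path: app tier to database port.
resource "aws_vpc_security_group_ingress_rule" "db_from_app" {
  security_group_id            = aws_security_group.db.id
  referenced_security_group_id = var.app_security_group_id
  ip_protocol                  = "tcp"
  from_port                    = 5432 # assumes PostgreSQL
  to_port                      = 5432
  description                  = "Database connections from the app tier"
}
```

With no CIDR-based rule present, a compromised host in another segment has no network path to the database, even from inside the VPC.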

Challenges and Considerations

While micro-segmentation significantly improves security, it does introduce some complexity. Managing multiple security groups, network ACLs, and segmentation rules requires careful planning. Here are a few things to keep in mind:

- Misconfigurations can break things – A wrongly configured security group might block legitimate traffic, causing unexpected downtime. Regular testing and monitoring help avoid this.

- Balancing security and flexibility – While it’s tempting to lock everything down, over-segmentation can lead to operational headaches. The key is finding a balance between security and maintainability.

- Logging and monitoring are crucial – Since segmentation rules are strict, monitoring rejected traffic is just as important as tracking allowed traffic. AWS VPC Flow Logs show which connections were accepted or rejected, which helps trace a blocked request back to the rule responsible.
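To capture rejected traffic specifically, a flow log can be limited to `REJECT` records. The standalone sketch below sends them to S3; both variables are hypothetical inputs.

```hcl
variable "vpc_id" {
  type        = string
  description = "ID of the VPC to observe."
}

variable "flow_log_bucket_arn" {
  type        = string
  description = "ARN of the S3 bucket that receives the flow log records."
}

# Record only the connections that security groups or NACLs rejected.
resource "aws_flow_log" "rejected" {
  vpc_id               = var.vpc_id
  traffic_type         = "REJECT"
  log_destination_type = "s3"
  log_destination      = var.flow_log_bucket_arn
}
```

Setting `traffic_type` to `ALL` would also capture accepted traffic, at the cost of a larger log volume.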

While segmentation limits network access, true Zero Trust requires verifying every request at the identity level as well. Next, we’ll explore how Zero Trust Access ensures that only authenticated and authorized requests are allowed—no matter where they originate.

Principle #5: Zero Trust Access

In a Zero Trust model, we assume that attackers may already be inside, whether through compromised credentials, misconfigurations, or insider threats. That means we can't rely on network boundaries alone. Instead, we need to verify every request, every time. Zero Trust Access ensures that every request is authenticated, authorized, and contextually evaluated before it reaches sensitive resources. This applies to users, services, and even API calls.

Implementing OIDC Authentication with ALB

A key step toward Zero Trust is enforcing authentication at the Application Load Balancer (ALB) level. By configuring OpenID Connect (OIDC) with an external identity provider, you ensure only authenticated requests ever reach your backend services. Here’s the basic flow:

- Incoming Request – A user attempts to access your application through the ALB.

- Check Authentication – The ALB checks whether there's a valid session cookie indicating the user has been authenticated already. If not, it redirects the user to the Identity Provider (IdP), such as Amazon Cognito, Auth0, or Okta.

- User Login – The IdP verifies the user's credentials. Upon successful login, it redirects the user back to the ALB with an authorization code, which the ALB exchanges at the IdP's token endpoint for an ID token (JWT) and the user's claims.

- Session Cookie Creation – The ALB creates or updates a session cookie for the user, so subsequent requests don’t trigger additional logins.

- Forward Request – The ALB forwards the now-authenticated request to your backend (such as EC2 instances or a containerized service).
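The flow above maps onto an HTTPS listener with two ordered actions: authenticate first, then forward. In this standalone sketch all variables are hypothetical, and the endpoint URLs depend entirely on the identity provider you use.

```hcl
variable "alb_arn" {
  type = string
}

variable "certificate_arn" {
  type = string
}

variable "target_group_arn" {
  type = string
}

# OIDC settings as published by the identity provider (hypothetical input).
variable "oidc" {
  type = object({
    issuer                 = string
    authorization_endpoint = string
    token_endpoint         = string
    user_info_endpoint     = string
    client_id              = string
    client_secret          = string
  })
  sensitive = true
}

resource "aws_lb_listener" "https" {
  load_balancer_arn = var.alb_arn
  port              = 443
  protocol          = "HTTPS"
  ssl_policy        = "ELBSecurityPolicy-TLS13-1-2-2021-06"
  certificate_arn   = var.certificate_arn

  # Step 1: unauthenticated requests are redirected to the IdP.
  default_action {
    order = 1
    type  = "authenticate-oidc"

    authenticate_oidc {
      issuer                     = var.oidc.issuer
      authorization_endpoint     = var.oidc.authorization_endpoint
      token_endpoint             = var.oidc.token_endpoint
      user_info_endpoint         = var.oidc.user_info_endpoint
      client_id                  = var.oidc.client_id
      client_secret              = var.oidc.client_secret
      on_unauthenticated_request = "authenticate"
    }
  }

  # Step 2: only authenticated requests reach the backend.
  default_action {
    order            = 2
    type             = "forward"
    target_group_arn = var.target_group_arn
  }
}
```

The ALB passes the user's claims to the backend in request headers such as `x-amzn-oidc-data`, which the application should verify before relying on them.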

Beyond ALB: Enforcing Access at the API Level

While ALB authentication is a great first step, some applications require more granular access controls at the API level. AWS provides multiple ways to achieve this:

- API Gateway with IAM Authentication – Each API request must be signed with valid IAM credentials.

- JWT-based Authorization – Enforce authentication and authorization directly within API requests.

- AWS Verified Access – A more advanced Zero Trust access management service for securing applications without a VPN.

These approaches allow you to enforce per-request authentication and authorization, ensuring that every API call is properly validated.
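As one possible shape for the JWT-based option, an API Gateway HTTP API can validate tokens before a route is invoked. The standalone sketch below assumes an existing HTTP API and integration; every variable and the `GET /orders` route are hypothetical.

```hcl
variable "http_api_id" {
  type        = string
  description = "ID of an existing API Gateway HTTP API."
}

variable "integration_id" {
  type        = string
  description = "ID of an existing integration for the route."
}

variable "jwt_issuer" {
  type        = string
  description = "Issuer URL of the identity provider."
}

variable "jwt_audience" {
  type        = string
  description = "Expected audience (client ID) of incoming tokens."
}

# Validates signature, issuer, audience, and expiry of the bearer token.
resource "aws_apigatewayv2_authorizer" "jwt" {
  api_id           = var.http_api_id
  name             = "zt-jwt"
  authorizer_type  = "JWT"
  identity_sources = ["$request.header.Authorization"]

  jwt_configuration {
    issuer   = var.jwt_issuer
    audience = [var.jwt_audience]
  }
}

# Requests to this route are rejected unless the authorizer accepts the token.
resource "aws_apigatewayv2_route" "orders" {
  api_id             = var.http_api_id
  route_key          = "GET /orders"
  authorization_type = "JWT"
  authorizer_id      = aws_apigatewayv2_authorizer.jwt.id
  target             = "integrations/${var.integration_id}"
}
```

For service-to-service calls, setting `authorization_type` to `AWS_IAM` instead would require each request to be signed with IAM credentials.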

Challenges of Zero Trust Access

Enforcing strict access controls comes with trade-offs. Some key challenges include:

- Initial setup complexity – Configuring authentication and authorization layers can require additional setup, especially when integrating with external identity providers.

- Balancing security with user experience – If access policies are too strict, they can frustrate users. Single sign-on (SSO) solutions help streamline authentication without weakening security.

- Managing temporary credentials – Short-lived access tokens improve security but require careful management to avoid breaking automated workflows.

Despite these challenges, the benefits of Zero Trust Access far outweigh the difficulties. By ensuring that every request is verified and authorized, we eliminate reliance on outdated perimeter-based security models.


Conclusion

Building a Zero Trust architecture in AWS is not just about implementing a few security controls. It’s about changing the mindset from implicit trust to continuous verification. We started with the core idea that traditional security models are no longer sufficient, and we broke down the five key principles that form the foundation of Zero Trust:

- Distrust Everything – Every request, device, and user must be verified before being granted access. We applied this by enforcing strict default-deny policies at the network level.

- Least Privilege – Every component should have only the permissions it absolutely needs. We enforced this with IAM roles, fine-grained policies, and short-lived credentials.

- Continuous Monitoring – Security is an ongoing process, not a one-time setup. Using AWS CloudTrail, CloudWatch, and logging buckets, we ensured every action is logged and analyzed.

- Micro-Segmentation – Breaking the network into isolated sections minimizes lateral movement. We structured our infrastructure with public and private subnets and WAF protections.

- Zero Trust Access – Authentication and authorization must happen on every request, not just at login. By integrating OIDC authentication at the ALB level, we ensured that only verified users could access sensitive workloads.

The Road Ahead

Zero Trust isn't a product you install; it's an ongoing strategy that adapts as threats change. This blog post outlined a practical approach for securing an AWS environment, but there's always room to refine and enhance security measures. Future improvements might include:

- AI-driven security policies that automatically adjust based on real-time threats.

- Deeper integration with AWS Verified Access for more granular, context-aware authorization.

- Automated incident response using serverless security tools like AWS Lambda.

The key takeaway? Security is never "done." By continuously refining policies, monitoring activity, and adapting to new threats, organizations can stay ahead of attackers and keep their cloud environments secure.

Call to Action 🚀

Now it’s your turn. Experiment, build, and break things in a secure way. Try implementing these Zero Trust principles in your own AWS environment and see how they fit into your security strategy.

For the complete Terraform code examples and CI/CD workflow using Checkov, check out my GitHub repository. Everything you need to get started with a Zero Trust setup is there.

I’d love to hear your thoughts! If you have questions, feedback, or ideas, please reach out. Let’s keep the conversation going and refine these security practices together.