Manage a unified LLM API platform with LiteLLM

Published on 22.09.2025 by Lucas Brüning

Identify the advantages and challenges of a multi-provider LLM architecture and learn how the open-source tool LiteLLM solves these challenges. Explore LiteLLM's features and see how centralizing your model providers helps you manage users, permissions, and costs. Learn how to shorten the development time of your agentic workflows and applications while increasing their flexibility.

Use cases for multiple LLMs

In the last couple of years, a large number of new LLMs with different sizes and capabilities have come to market. This directly affects how we choose the right LLMs for our workflows. It might be tempting to simply pick the latest and greatest model from a well-known provider such as OpenAI or Anthropic, for the sake of simplicity and quick decision-making. While this can be a good fit for smaller, simpler, single-domain use cases, relying on a single LLM can be limiting in more complex workloads. One example is using multiple AI agents to automate a development process.

In this example, there are three agents:

- Planner Agent: receives the user’s input, plans the corresponding development tasks, and delegates them to the web and/or coding agent.

- Coding Agent: generates code, edits files, and executes commands; communicates with the web agent.

- Web Agent: searches the web for documentation or other information; communicates with the coding agent.

In such a use case, it is worth considering a different, task-optimized LLM for each agent. For example, you could use the Claude model family for code generation and one of the Perplexity AI models for web search. For planning, you could use a smaller OpenAI model for cost efficiency. This way, you can achieve better results while potentially reducing costs.
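To make the idea concrete, here is a minimal Python sketch of such a per-agent model assignment. It assumes LiteLLM-style `provider/model` identifiers; the `AGENT_MODELS` table and the model names in it are illustrative placeholders, not recommendations.

```python
from dataclasses import dataclass

# Hypothetical agent-to-model assignment. The "provider/model" prefix style
# follows LiteLLM's naming convention; the model names are placeholders.
AGENT_MODELS = {
    "planner": "openai/gpt-4o-mini",       # small, inexpensive model for planning
    "coding": "anthropic/claude-sonnet",   # code generation (placeholder name)
    "web": "perplexity/sonar",             # web search (placeholder name)
}


@dataclass(frozen=True)
class ModelChoice:
    provider: str
    model: str


def model_for(agent: str) -> ModelChoice:
    """Return the provider and model assigned to an agent role."""
    try:
        identifier = AGENT_MODELS[agent]
    except KeyError:
        raise ValueError(f"no model configured for agent {agent!r}") from None
    provider, _, model = identifier.partition("/")
    return ModelChoice(provider=provider, model=model)


if __name__ == "__main__":
    for agent in AGENT_MODELS:
        choice = model_for(agent)
        print(f"{agent:>8} -> {choice.provider}: {choice.model}")
```

Keeping the assignment in one table means swapping the model of a single agent is a one-line change.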

This example can be extended and changed in various ways. You might add image or even video analysis with Google's Gemini, or have specific agent tasks handled by a European model provider such as Mistral AI. You might also run open-source models on-premises for compliance reasons, or improve cost efficiency or performance with fine-tuned small language models (SLMs). Using multiple models also helps meet resiliency and scalability requirements: in case of an outage, you can fail over to other cloud or on-premises models, or load-balance requests across providers.
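A failover chain can be sketched in a few lines of plain Python. LiteLLM has its own fallback and load-balancing settings, so this is not how LiteLLM implements it; `call_model` is a hypothetical client function that raises on outages, and the model names are placeholders.

```python
from typing import Callable, Sequence


class AllModelsFailed(Exception):
    """Raised when every model in the fallback chain has failed."""


def complete_with_fallback(
    prompt: str,
    models: Sequence[str],
    call_model: Callable[[str, str], str],
) -> tuple[str, str]:
    """Try each model in order and return (model, answer) from the first success.

    `call_model(model, prompt)` is a hypothetical client function that raises
    an exception on outages, rate limits, or other errors.
    """
    errors: list[str] = []
    for model in models:
        try:
            return model, call_model(model, prompt)
        except Exception as exc:  # outage, rate limit, timeout, ...
            errors.append(f"{model}: {exc}")
    raise AllModelsFailed("; ".join(errors))


if __name__ == "__main__":
    def fake_call(model: str, prompt: str) -> str:
        # Simulate an outage at the cloud provider.
        if model.startswith("cloud/"):
            raise ConnectionError("provider unavailable")
        return f"[{model}] answer to: {prompt}"

    print(complete_with_fallback("Hi", ["cloud/large-model", "onprem/slm"], fake_call))
```

Ordering the list from preferred to fallback model keeps the policy explicit; spreading requests across several equivalent models instead would be load balancing.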

Using a broad, task-specific set of models can increase the efficiency and performance of your workflows. But as the number of models in your workflows grows, you inevitably end up working with multiple LLM providers, in addition to any on-premises models. Such a cross-provider architecture comes with its own challenges in using, tracking, and maintaining workflows.

The challenges with multiple LLM providers

Over the last couple of years, many new model providers have emerged, offering models of different sizes for different use cases. This enables task-specific model usage in an agentic workflow. Although broad model choice is welcome, it means working with multiple providers, and even a handful of providers can cause problems. The most common are differing API specifications, user management, and cost tracking.

Different API specifications

Most LLM providers, LLM applications, and local LLM inference servers implement API endpoints and data formats that follow OpenAI’s API, which makes them so-called “OpenAI-compatible”. This allows developers to switch providers without changing the request payload. Even though OpenAI compatibility has become established, parts of an application may still need to change to deal with different provider endpoints or authentication mechanisms. The application might also need a separate SDK for each provider if developers prefer SDKs over raw HTTP calls.
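The kind of branching this causes in application code can be sketched as follows. The function covers only the authentication headers of the providers' native APIs as we understand them (a bearer token for OpenAI-compatible servers, an `api-key` header for Azure OpenAI, `x-api-key` plus `anthropic-version` for Anthropic's Messages API); check each provider's API reference before relying on it.

```python
def auth_headers(provider: str, api_key: str) -> dict[str, str]:
    """Build the authentication headers for each provider's native API.

    Simplified sketch; endpoints, versions, and extra headers are omitted.
    """
    if provider in ("openai", "vllm", "ollama"):
        # OpenAI-compatible servers expect a bearer token.
        return {"Authorization": f"Bearer {api_key}"}
    if provider == "azure":
        # Azure OpenAI accepts the key in an "api-key" header.
        return {"api-key": api_key}
    if provider == "anthropic":
        # Anthropic's native Messages API uses its own key header plus a version header.
        return {"x-api-key": api_key, "anthropic-version": "2023-06-01"}
    raise ValueError(f"unknown provider: {provider}")
```

Every new provider adds another branch like these, which is exactly what a gateway with a single API hides from the application.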

User management

Using multiple model providers means that each provider has its own user management, and every team member needs an account with every provider in use. In addition, you may want one or more API keys per user or per application to keep concerns separated. But even with separate API keys, it can be hard to build robust access management with current providers. Some providers don’t let you restrict an API key to specific models. In that case, every user with an API key can use every model the provider offers, even the most expensive ones. On-premises models make user management harder still, because you must build or maintain your own system for managing users and API keys.

In general, user management becomes decentralized, making it hard to keep track of current users, active API keys, and their permissions, if those can be managed at all.

Difficult cost tracking

Decentralization is also a challenge for cost tracking across providers. Often, costs can only be tracked per provider and per API key, which leaves little transparency into the costs of an application or agentic workflow as a whole. It is also difficult to set proper budgets in a multi-provider architecture: while most providers support budgets per API key and per account, it is hard to aggregate them across providers, which leads to inefficient spending. In addition, it is challenging to monitor the usage of each model and get an overview of the total number of tokens processed. Such an overview would be especially useful for on-premises LLMs, because token usage correlates directly with resource usage and therefore with cost; it would let you put a realistic price estimate on on-premises models.
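With a central record of usage, the per-workflow view becomes a simple aggregation. The sketch below assumes normalized usage records that already carry a cost and a workflow tag; the `UsageRecord` type and the tag are hypothetical, and collecting such records from separate provider dashboards is exactly the part that is hard without a gateway.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRecord:
    provider: str    # e.g. "openai", "anthropic", "onprem"
    workflow: str    # hypothetical tag identifying the agentic workflow
    cost_usd: float


def cost_per_workflow(records: list[UsageRecord]) -> dict[str, float]:
    """Sum the cost of each workflow across all providers."""
    totals: dict[str, float] = defaultdict(float)
    for record in records:
        totals[record.workflow] += record.cost_usd
    return dict(totals)


if __name__ == "__main__":
    records = [
        UsageRecord("openai", "support-bot", 0.50),
        UsageRecord("anthropic", "support-bot", 0.25),
        UsageRecord("onprem", "code-agent", 1.00),
    ]
    print(cost_per_workflow(records))
```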

How LiteLLM solves these challenges

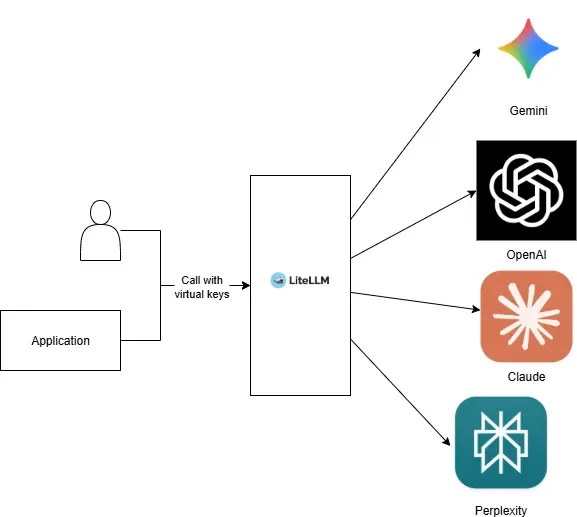

To address the decentralization of LLM providers, we need a gateway that recentralizes access to the models of all our providers. This is the problem the open-source project LiteLLM was created to solve. Its core function is to act as a centralized gateway where the APIs of all your LLM providers converge and are “merged” into a single OpenAI-compatible API.

LiteLLM supports all major providers, such as OpenAI, Azure, Anthropic, Google's Gemini, and many more. It also supports on-premises LLMs served by vLLM or Ollama. Configuration is done in a single config file in which you specify the endpoints, settings, and available models of each LLM provider you use.
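The `model_list` section of a LiteLLM config file maps the model names your clients use to provider-specific settings. The sketch below shows that structure as a Python list (LiteLLM's Python `Router` accepts the same shape via its `model_list` parameter) plus a small lookup helper. The model names, the `os.environ/...` key reference, and the Ollama address are illustrative assumptions; verify the exact keys against the LiteLLM proxy configuration docs.

```python
# Mirrors the structure of the `model_list` section in a LiteLLM config.yaml.
# Model names, the environment variable, and the Ollama address are examples.
MODEL_LIST = [
    {
        "model_name": "planner",  # the name clients send in their requests
        "litellm_params": {
            "model": "openai/gpt-4o-mini",
            # In a LiteLLM config, "os.environ/<VAR>" is read from the environment.
            "api_key": "os.environ/OPENAI_API_KEY",
        },
    },
    {
        "model_name": "local-slm",
        "litellm_params": {
            "model": "ollama/llama3",
            "api_base": "http://localhost:11434",
        },
    },
]


def resolve(model_name: str) -> dict:
    """Return the provider-side parameters for a client-facing model name."""
    for entry in MODEL_LIST:
        if entry["model_name"] == model_name:
            return entry["litellm_params"]
    raise KeyError(f"unknown model name: {model_name}")
```

In LiteLLM, several entries may share the same `model_name`, which is how it load-balances across deployments; this simplified `resolve` helper only returns the first match.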

Apart from the unified gateway, LiteLLM also addresses the other challenges we discussed, with user and API-key management and a centralized, more detailed approach to cost tracking. Both features are discussed in more detail below. The open-source version of LiteLLM also offers a variety of other useful features for an LLM tech stack, such as load balancing between models, guardrails, response caching with vector databases, and management of MCP servers. It also integrates with other software and services, such as Langfuse, Datadog, Weights & Biases, and OpenTelemetry.

LiteLLM also has an enterprise offering with additional features such as SSO, grouping teams into organizations, audit logging, and more fine-grained security and compliance controls.

A unified API for all your LLM providers

The core function of LiteLLM is to provide one OpenAI-compatible API endpoint for multiple models and LLM providers. To do this, LiteLLM uses your providers’ credentials to call the respective provider APIs. It uses a lookup table to determine the supported API parameters and the costs of the different models. The high-level architecture of the unified API is shown in the figure below.
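The lookup-table idea can be illustrated with a small sketch that drops request parameters a model does not support. The table contents and the provider/model names are invented for illustration; LiteLLM's real table is far larger, and its parameter handling is configurable (for example via its `drop_params` setting).

```python
# Hypothetical capability table in the spirit of LiteLLM's model lookup table;
# the model names and supported parameters are invented for illustration.
MODEL_INFO: dict[str, set[str]] = {
    "provider-a/large-model": {"temperature", "max_tokens", "tools", "seed"},
    "provider-b/small-model": {"temperature", "max_tokens"},
}


def filter_params(model: str, params: dict) -> tuple[dict, set]:
    """Split request params into those the model supports and those to drop."""
    supported = MODEL_INFO[model]
    kept = {name: value for name, value in params.items() if name in supported}
    dropped = set(params) - supported
    return kept, dropped


if __name__ == "__main__":
    request_params = {"temperature": 0.2, "tools": [], "max_tokens": 256}
    for model in MODEL_INFO:
        kept, dropped = filter_params(model, request_params)
        print(model, "kept:", sorted(kept), "dropped:", sorted(dropped))
```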

Since LiteLLM provides a single OpenAI-compatible API, very few software changes are needed, because popular SDKs and libraries already expect an OpenAI-compatible interface. LiteLLM itself also provides an SDK for calling model APIs through the gateway. Switching between models across providers also becomes easier, because in most cases the model name in the API call is the only thing that changes.
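Calling the gateway then looks the same for every model. The sketch below builds an OpenAI-style chat request with Python's standard library; it assumes a LiteLLM proxy on `localhost:4000` (its default port), a placeholder virtual key, and the hypothetical model names `planner` and `local-slm`. With the official OpenAI Python SDK, you would instead pass the gateway address as `base_url` and the virtual key as `api_key`.

```python
import json
import urllib.request

GATEWAY_URL = "http://localhost:4000"  # assumed address of a local LiteLLM proxy
VIRTUAL_KEY = "sk-example"             # placeholder virtual API key


def chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request against the gateway."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{GATEWAY_URL}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {VIRTUAL_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Switching providers only changes the model name; URL and key stay the same.
    for model in ("planner", "local-slm"):
        request = chat_request(model, "Summarize the open tasks.")
        print(request.full_url, json.loads(request.data)["model"])
        # urllib.request.urlopen(request) would send it to a running proxy.
```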

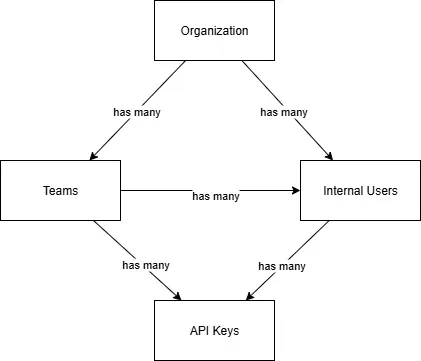

User self-service and internal user management

LiteLLM’s built-in user management lets you create users with different permissions and thus limit access to specific models. Each user can generate multiple “virtual” API keys, which authenticate against the LiteLLM gateway and are used to make API calls to your providers. You can also use the LiteLLM API to create virtual API keys or users programmatically, which is especially useful if you want to automate user creation. For enterprises, LiteLLM also supports managing users via SSO with Microsoft Entra ID.
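Creating a virtual key programmatically is a single HTTP call. The sketch below targets LiteLLM's `/key/generate` endpoint with the `user_id`, `models`, and `max_budget` fields as we understand them from the LiteLLM documentation; the proxy address, the master key, the user ID, and the model names are placeholders, and the field names should be checked against the current API reference.

```python
import json
import urllib.request

PROXY_URL = "http://localhost:4000"  # assumed LiteLLM proxy address
MASTER_KEY = "sk-master-example"     # placeholder admin (master) key


def generate_key_request(user_id: str, models: list[str], max_budget: float) -> urllib.request.Request:
    """Build a request for a virtual key restricted to certain models and a budget."""
    body = {"user_id": user_id, "models": models, "max_budget": max_budget}
    return urllib.request.Request(
        f"{PROXY_URL}/key/generate",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {MASTER_KEY}", "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    request = generate_key_request("dev-alice", ["planner", "local-slm"], 20.0)
    print(request.full_url, request.data.decode("utf-8"))
    # Sending it with urllib.request.urlopen(request) against a running proxy
    # should return a JSON response containing the new virtual key.
```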

Users can also be grouped into teams, and teams into organizations. This lets you mirror your company structure and simplifies access control, because permissions and allowed models can be set per team or per organization as well. The hierarchy in LiteLLM works as follows: an organization can hold multiple teams or users, a team can hold multiple users, and a user or team can have multiple virtual API keys.

Overall, this streamlines and simplifies user management and user creation when working with multiple LLM providers.
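One way to picture the hierarchy is as nested objects in which each level narrows the set of allowed models. The following is a hypothetical Python model for illustration only (users are omitted for brevity); it is not LiteLLM's internal data model, and the intersection rule is an assumption made for this sketch.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Organization:
    name: str
    allowed_models: set[str]


@dataclass
class Team:
    name: str
    organization: Organization
    allowed_models: set[str]


@dataclass
class VirtualKey:
    alias: str
    team: Team
    allowed_models: Optional[set[str]] = None  # None: inherit the team's models


def effective_models(key: VirtualKey) -> set[str]:
    """Each level can only narrow access: organization, then team, then key."""
    models = key.team.organization.allowed_models & key.team.allowed_models
    if key.allowed_models is not None:
        models &= key.allowed_models
    return models


if __name__ == "__main__":
    org = Organization("acme", {"planner", "coder", "web-search"})
    dev_team = Team("developers", org, {"planner", "coder"})
    print(sorted(effective_models(VirtualKey("ci-pipeline", dev_team, {"coder"}))))
```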

LiteLLM budgets and cost tracking

Because every request goes through your gateway, tracking the costs and usage of your models becomes easier. Costs can be tracked per user, virtual key, team, or organization, and a budget can be set at every level of the hierarchy. LiteLLM even supports a “global” budget across all teams and organizations. Budgets can have reset periods, so they can be reset on a schedule, which is useful if you want to reset developer or user budgets every month. Budgets can also serve as an alerting metric, for example to trigger an alert when spending crosses a budget threshold.
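A budget with a reset period and an alert threshold can be sketched as a small state object. This is a simplified illustration, not LiteLLM's implementation; the `Budget` class and its 80 % default alert ratio are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Budget:
    """Hypothetical budget with a fixed reset interval and an alert threshold."""
    max_budget: float          # spend limit per period, e.g. in USD
    reset_every: timedelta     # e.g. timedelta(days=30) for a monthly budget
    period_start: datetime     # start of the current budget period
    alert_ratio: float = 0.8   # alert once 80 % of the limit is spent
    spend: float = 0.0

    def _maybe_reset(self, now: datetime) -> None:
        # Advance in whole periods so the reset schedule stays fixed.
        while now - self.period_start >= self.reset_every:
            self.period_start += self.reset_every
            self.spend = 0.0

    def try_spend(self, cost: float, now: datetime) -> str:
        """Return "blocked", "alert", or "ok" for a request with the given cost.

        A request's cost is only known after the response, so the limit is
        checked against past spend; a single request can overshoot the budget.
        """
        self._maybe_reset(now)
        if self.spend >= self.max_budget:
            return "blocked"
        self.spend += cost
        if self.spend >= self.alert_ratio * self.max_budget:
            return "alert"
        return "ok"
```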

Most model providers publish their model prices. For these models, costs are calculated by counting the input and output tokens and multiplying them by the model's per-token prices, which LiteLLM stores in a lookup table. You can also define your own pricing, which is then used for the cost calculation. This is especially useful if you plan to use on-premises LLMs and want to put a price tag on their token usage.
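The calculation itself is simple: cost = input tokens × input price per token + output tokens × output price per token. The sketch below applies it with a hypothetical price table; the key names mirror the `input_cost_per_token` and `output_cost_per_token` fields that, as far as we know, LiteLLM accepts for custom pricing, and the model names and prices are invented.

```python
# Hypothetical per-token prices in USD. In practice, public prices come from
# the gateway's lookup table and on-premises prices from your own estimate.
PRICES = {
    "cloud/model-x": {"input_cost_per_token": 0.15e-6, "output_cost_per_token": 0.60e-6},
    "onprem/slm": {"input_cost_per_token": 0.02e-6, "output_cost_per_token": 0.05e-6},
}


def request_cost(model: str, prompt_tokens: int, completion_tokens: int) -> float:
    """Cost of one request: tokens times the per-token price, input plus output."""
    price = PRICES[model]
    return (prompt_tokens * price["input_cost_per_token"]
            + completion_tokens * price["output_cost_per_token"])


if __name__ == "__main__":
    for model in PRICES:
        print(model, f"{request_cost(model, 1_000, 500):.6f} USD")
```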

Summary

We discussed a simple agentic architecture that uses multiple models and showed why integrating different LLM providers into it matters. While using multiple models and providers can improve the results and cost-effectiveness of your agentic workflows, it also raises new challenges, including differing API specifications, decentralized user management, and difficult cost tracking. These challenges are especially pronounced when you use on-premises models alongside cloud-hosted LLMs.

These challenges can be solved by recentralizing the API platform with LiteLLM, an open-source tool that provides various useful features for an LLM stack. These include centralized user and API-key management with an IAM hierarchy, better usage and cost tracking across all your providers, and custom price tags for on-premises models. It also exposes a single OpenAI-compatible API that merges all the LLM providers you use.